About the Project

Designing International Law and Ethics into Military Artificial Intelligence (DILEMA)

The DILEMA project explores interdisciplinary perspectives on military applications of artificial intelligence (AI), with a focus on legal, ethical, and technical approaches to safeguarding human agency over military AI. In particular, it analyses the subtle ways in which AI can affect or reduce human agency, and seeks to ensure compliance with international law and accountability by design.

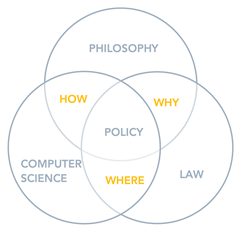

An interdisciplinary research team works in dialogue with partners to address the ethical, legal, and technical dimensions of the project. First, research will be conducted on the foundational nature of the pivotal notion of human agency, so as to unpack the fundamental reasons why human control over military technologies must be guaranteed. Second, the project will identify where the role of human agents must be maintained, in particular to ensure legal compliance and accountability. It will map out which forms and degrees of human control and supervision should be exercised, at which stages, and over which categories of military functions and activities. Third, the project will analyse how to ensure, at the technical level, that military technologies are designed and deployed within the ethical and legal boundaries identified.

Throughout the project, research findings will provide input for the policy and regulation of military technologies involving AI. In particular, the research team will translate results into policy recommendations for national and international institutions, as well as into technical standards and testing protocols for compliance and regulation.

The project is funded by the Dutch Research Council (NWO) Platform for Responsible Innovation (NWO-MVI), project number MVI.19.017. It started in September 2020 and will run for four years.