Implementing International Responsibility for Artificial Intelligence in Military Practice (I2 RAMP)

Research project (2021-2024)

Researcher: Dr Magdalena Pacholska

The I2 RAMP project examines how to conceptualise and implement international responsibility for violations of human rights and international humanitarian law resulting from the use of military AI.

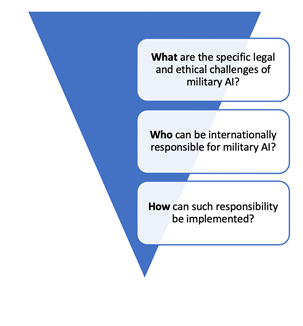

During the project, Magdalena Pacholska will work closely with the team of the DILEMA project to comprehensively analyse the ethical and legal challenges that military AI raises. This work will result in both peer-reviewed academic output and viable operational guidance. To address this overall aim, the project comprises three interlinked research questions:

The first research question establishes the specific legal and ethical challenges AI raises in the military realm, with a view to determining whether they are distinctive enough to warrant treating AI-based military equipment differently from other technologically advanced weapons systems.

The second research question examines who can bear international responsibility for wrongs committed through the use of military AI, and analyses the legal avenues that would allow both individuals and States to be held responsible.

The third research question inquires how such responsibility can be implemented and sets forth practical guidelines to assist States or military commanders in preventing breaches of international humanitarian law (IHL) or in mitigating the consequences of harm done, should such breaches occur.

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 101031698. It started in September 2021 and will run for three years.
